Right now, the dominant paradigm for connecting agents to the world runs through tool-calling protocols. MCP (Model Context Protocol) gives agents access to data sources and APIs. A2A (Agent-to-Agent Protocol) lets agents delegate tasks to each other. These are important and necessary layers. But they share a fundamental assumption: that agents operate on behalf of users, executing tasks and returning results. The interaction model is transactional — request in, response out.

This works well for a lot of things. But it completely breaks down when you want an agent to work alongside you in a shared space.

The Collaboration Gap

Think about what happens when you're on a video call with a colleague, working on a map together. They can point at a region. They can draw a circle around an area of interest. They can drag a data layer on and off. You can see their cursor moving, which tells you where their attention is before they say anything. The collaboration is spatial, continuous, and ambient, not a series of discrete requests and responses.

Now think about how AI agents currently interact with spatial interfaces like maps. An agent might call an API to add a GeoJSON layer. It might execute a filter query and return results. It might generate code that renders a visualization. In every case, the agent is operating behind the glass, reaching into the interface through tool calls, never actually inhabiting it. You can't see where it's "looking." It can't point at something. It can't sketch an annotation while you watch.

This isn't a minor UX complaint. Research on human-AI collaboration in shared workspaces suggests that implicit spatial communication (cursor movement, drawing, co-manipulation of shared objects) may be more effective than explicit verbal communication. A 2024 study on Mutual Theory of Mind in human-AI teams found that bidirectional text communication decreased team performance, while the agent's spatial awareness and action-based coordination enhanced the human's understanding of the agent and their feeling of being understood.

The collaboration patterns we need already have names in the multiplayer software world: presence, awareness, shared cursors, and conflict-free replication. The problem is that none of these patterns has been built into a standard way for agents to join applications.

What Exists Today

The closest anyone has come to solving this is in the infinite canvas space. Matt Webb, during a 2023 residency at PartyKit, built AI "NPCs" (non-player characters) that joined tldraw whiteboards as legitimate multiplayer participants. His agents connected to the same Yjs collaboration room as human users, with their own cursors, names, and presence states. He developed a concept he called cursor proxemics, using the distance of an AI's cursor to convey attention and confidence, much like physical proximity conveys engagement in human interaction.
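To make cursor proxemics concrete, here is a hypothetical TypeScript sketch. It is not Webb's code: it assumes the agent has a target point it is attending to and a confidence score between 0 and 1, and `FAR_DISTANCE`, `NEAR_DISTANCE`, `hoverDistance`, and `proxemicCursor` are invented names with made-up values. The cursor hangs back when the agent is unsure and closes in as confidence rises.

```typescript
// Hypothetical sketch of cursor proxemics: the agent's cursor hovers far from
// the object it is attending to when unsure and moves closer as confidence rises.
// Not Matt Webb's implementation; names and distances are illustrative.

interface Point {
  x: number;
  y: number;
}

const FAR_DISTANCE = 200; // canvas units at confidence 0 (assumed)
const NEAR_DISTANCE = 10; // canvas units at confidence 1 (assumed)

// Clamp confidence to [0, 1] and interpolate linearly between the two distances.
function hoverDistance(confidence: number): number {
  const c = Math.min(1, Math.max(0, confidence));
  return FAR_DISTANCE + (NEAR_DISTANCE - FAR_DISTANCE) * c;
}

// Place the cursor on the ray from the target through the current cursor,
// at hoverDistance(confidence) from the target.
function proxemicCursor(current: Point, target: Point, confidence: number): Point {
  const d = hoverDistance(confidence);
  const dx = current.x - target.x;
  const dy = current.y - target.y;
  const len = Math.hypot(dx, dy);
  if (len === 0) {
    // Cursor is exactly on the target: back off along the +x axis.
    return { x: target.x + d, y: target.y };
  }
  return { x: target.x + (dx / len) * d, y: target.y + (dy / len) * d };
}
```

A fuller version would animate between positions and publish the result through the room's presence channel, so human participants see the cursor drift rather than jump.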

tldraw has since formalized some of this in its Agent Starter Kit, which gives AI agents a dual representation of the canvas state (visual screenshots and structured shape data), along with the ability to create, modify, and arrange elements on the canvas in real time.

Fermat, a collaborative canvas startup, explored what they called spatial querying: language model operations that function as movable zones on a 2D canvas, affecting whatever content enters their area. A sentiment analysis block, for instance, could be dragged around the document, automatically coloring text blocks based on their sentiment as they enter its radius. The AI operation becomes a spatial object you manipulate, not a menu item you invoke.
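The mechanics can be sketched as a zone with a center, a radius, and an operation that is re-applied whenever the zone or a block moves. This is a hypothetical TypeScript illustration, not Fermat's implementation; `Zone`, `runZone`, `moveZone`, and the keyword-based `colorBySentiment` (a stand-in for a real model call) are invented names.

```typescript
// Hypothetical "spatial query" zone: an operation attached to a circle on a
// 2D canvas is applied to every block whose center falls inside the circle.
// The sentiment scorer is a naive stand-in, not a real model call.

interface Block {
  id: string;
  x: number;
  y: number;
  text: string;
  color?: string;
}

interface Zone {
  x: number;
  y: number;
  radius: number;
  apply: (block: Block) => Block;
}

function inZone(zone: Zone, block: Block): boolean {
  return Math.hypot(block.x - zone.x, block.y - zone.y) <= zone.radius;
}

// Apply the zone's operation to blocks inside it; blocks outside pass through.
// Returns new objects and never mutates the input.
function runZone(zone: Zone, blocks: Block[]): Block[] {
  return blocks.map((b) => (inZone(zone, b) ? zone.apply(b) : b));
}

// Placeholder sentiment analysis: a keyword check standing in for an LLM call.
function colorBySentiment(block: Block): Block {
  const positive = /\b(good|great|love)\b/i.test(block.text);
  return { ...block, color: positive ? "green" : "red" };
}

// Dragging the zone just produces a zone with a new center.
function moveZone(zone: Zone, x: number, y: number): Zone {
  return { ...zone, x, y };
}
```

Calling `runZone` on the un-annotated blocks after every move means a block that leaves the zone loses its color; whether effects should persist instead is a design choice this sketch leaves open.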

On the academic side, the Collaborative Gym framework (Stanford, Dec 2024) formalized the concept of agents and humans sharing a workspace with asynchronous, non-turn-taking interaction. Their findings showed that collaborative agents consistently outperformed fully autonomous ones across travel planning, data analysis, and research tasks.

These are all promising signals. But they're isolated experiments, not shared infrastructure.

The Protocol Gap

Here's the architectural picture:

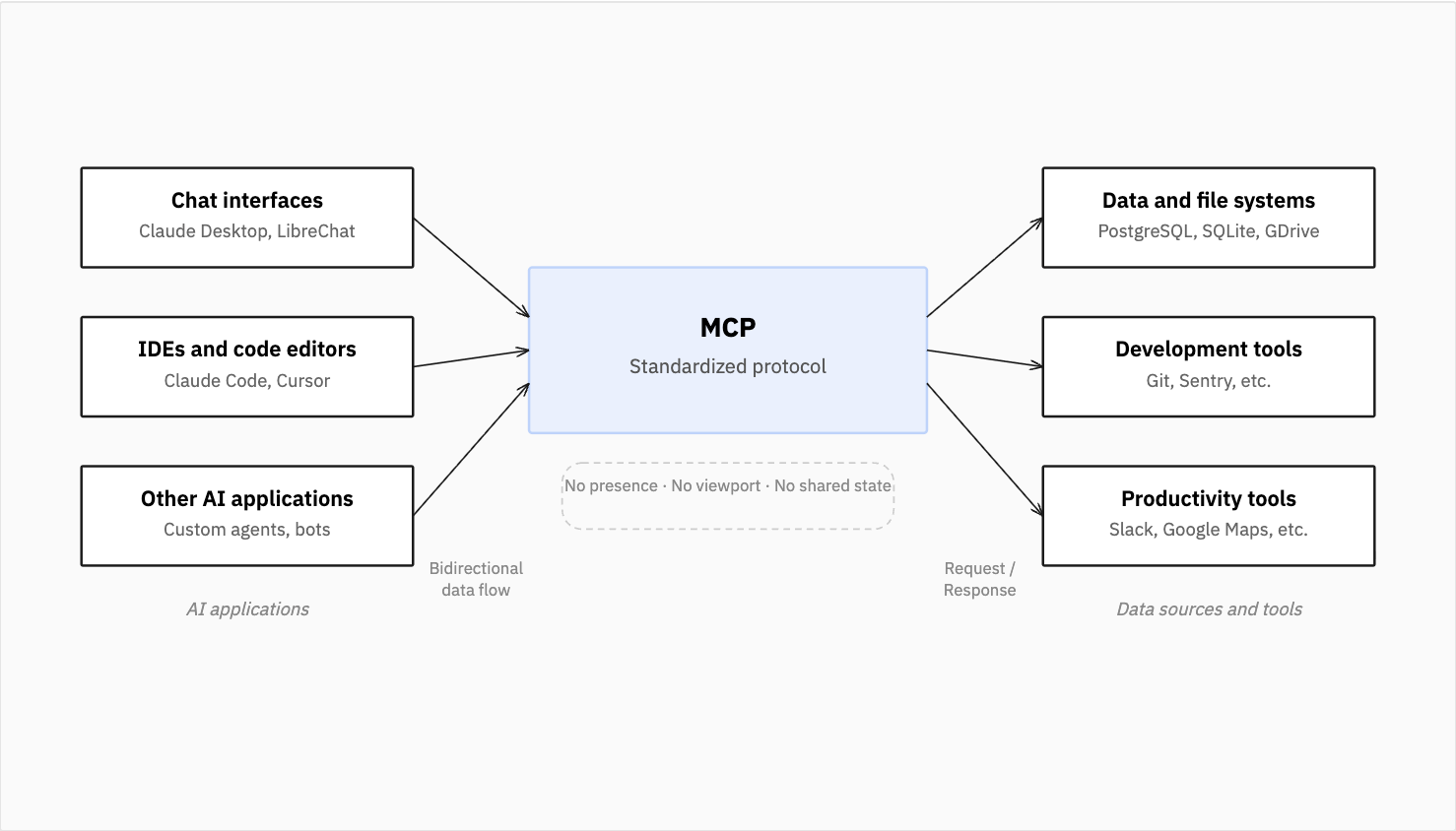

MCP connects agents to tools and data (vertical integration). It's request/response: the agent calls a function and gets a result. There is no concept of presence, viewport, or continuous shared state.

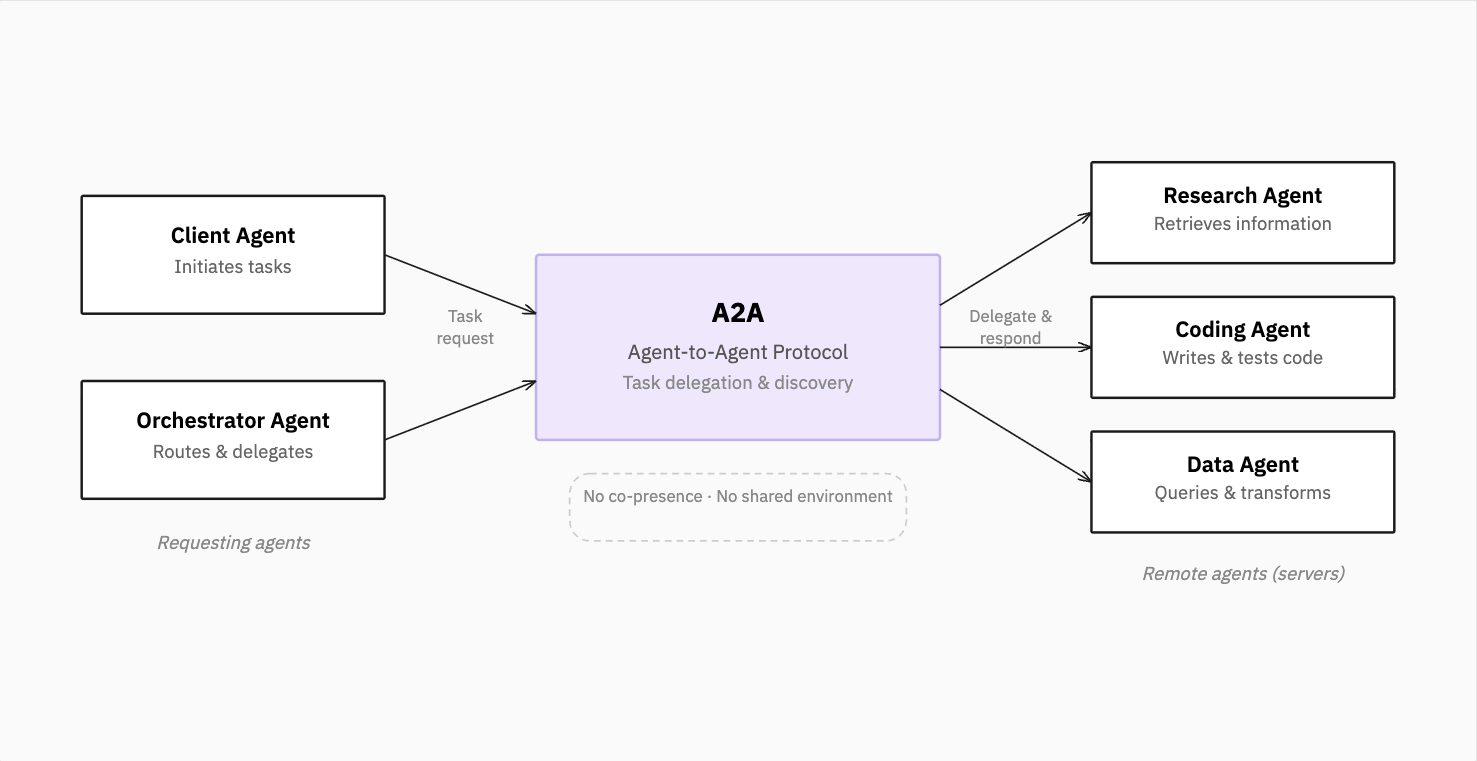

A2A connects agents to other agents (horizontal integration). It's task-oriented: one agent delegates work to another. There is still no concept of co-presence in a shared environment.

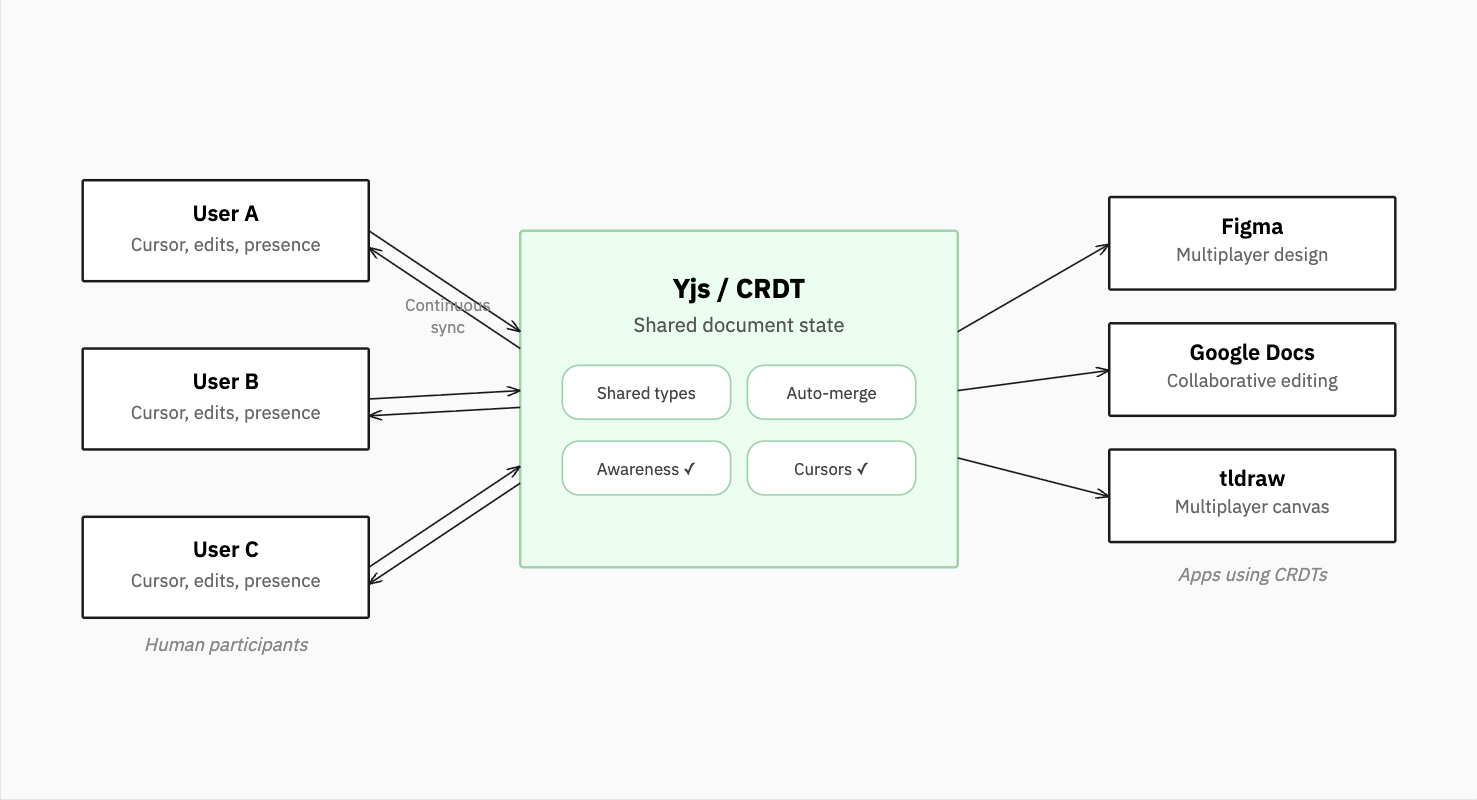

Real-time collaboration frameworks, such as CRDT libraries like Yjs, provide the actual infrastructure for real-time shared state: concurrent editing, automatic merging, conflict resolution, and, crucially, an awareness protocol that propagates ephemeral state like cursor positions and user status. This class of technology is what makes multiplayer work in tools like tldraw, Figma, and Google Docs, though each implements its own sync layer. It already supports the interaction patterns we need.
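The awareness piece is the one most relevant to agents, and it needs surprisingly little machinery. The sketch below is hand-rolled TypeScript loosely modeled on the Yjs awareness protocol (per-peer clocks, newer updates win, silent peers expire after a timeout). It is not the y-protocols API; `AwarenessStore`, its methods, and the 30-second default timeout are assumptions for illustration.

```typescript
// Hand-rolled awareness store, loosely modeled on the Yjs awareness protocol:
// each peer publishes ephemeral state with an increasing clock, newer clocks
// win, and peers that stop sending updates are expired. Not the y-protocols API.

interface PeerEntry<S> {
  state: S;
  clock: number;
  lastSeen: number; // timestamp (ms) of the last accepted update
}

class AwarenessStore<S> {
  private readonly peers = new Map<string, PeerEntry<S>>();
  private readonly timeoutMs: number;

  constructor(timeoutMs = 30000) {
    this.timeoutMs = timeoutMs;
  }

  // Accept an update only if its clock is newer than what we already hold.
  apply(peerId: string, state: S, clock: number, now: number): boolean {
    const prev = this.peers.get(peerId);
    if (prev !== undefined && clock <= prev.clock) return false;
    this.peers.set(peerId, { state, clock, lastSeen: now });
    return true;
  }

  // Remove peers not heard from within the timeout window; return their ids.
  expire(now: number): string[] {
    const stale: string[] = [];
    this.peers.forEach((entry, id) => {
      if (now - entry.lastSeen > this.timeoutMs) stale.push(id);
    });
    stale.forEach((id) => this.peers.delete(id));
    return stale;
  }

  // Snapshot of every live peer's current state (cursor, status, etc.).
  states(): Map<string, S> {
    const out = new Map<string, S>();
    this.peers.forEach((entry, id) => out.set(id, entry.state));
    return out;
  }
}
```

An agent would publish its cursor and status through the same store as human peers, re-sending with an incremented clock as a heartbeat, so nothing about the transport needs to be agent-specific.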

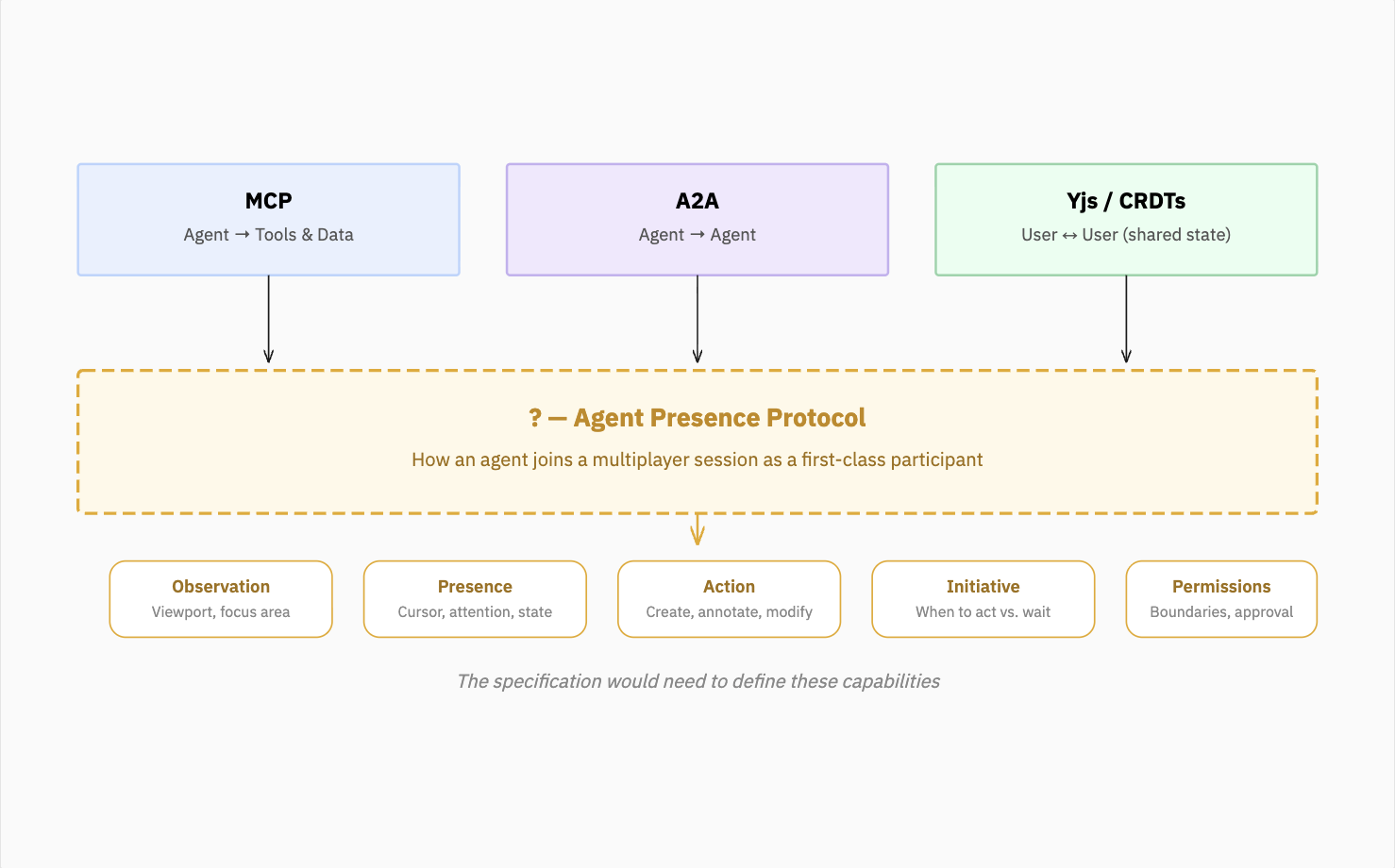

What's missing is the bridge: a specification for how an agent joins a multiplayer session as a first-class participant. Not as a tool-caller. Not as a code generator. As a collaborator with presence, spatial awareness, and the ability to co-manipulate shared state.

This specification would need to define:

- How an agent observes — what subset of the shared document state it receives, and at what fidelity (viewport, zoom level, focus area)

- How an agent signals presence — cursor position, attention indicators, confidence levels, idle vs. active states

- How an agent acts — creating, modifying, annotating, or highlighting elements within the shared state, using the same operations available to human participants

- How an agent negotiates initiative — when to act independently, when to propose changes, when to wait for human direction

- How permissions and boundaries work — what regions or layers the agent can modify, what requires human approval
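One way to make these requirements concrete is a descriptor the agent presents when it joins a session. The TypeScript below is a hypothetical sketch; no such specification exists yet, and every type, field, and the `routeEdit` policy are invented for illustration. It covers observation (viewport, zoom, layers), presence, initiative, and permissions, and shows how the last two could combine to decide whether an edit is applied, proposed, deferred, or rejected.

```typescript
// Hypothetical "agent participant" descriptor. No such spec exists yet;
// every name below is illustrative.

type BBox = [number, number, number, number]; // [west, south, east, north]

type Initiative = "autonomous" | "propose" | "wait";

// How the agent observes: which slice of shared state it is sent.
interface Observation {
  viewport: BBox;
  zoom: number;
  layers: string[];
}

// How the agent signals presence to other participants.
interface Presence {
  cursor: [number, number] | null; // [lng, lat]; null when not pointing
  status: "idle" | "active";
  confidence?: number; // 0..1 attention/confidence hint
}

// What the agent may touch, and where it must ask first.
interface Permissions {
  writableLayers: string[];
  requiresApproval: string[];
}

interface AgentParticipant {
  id: string;
  observation: Observation;
  presence: Presence;
  initiative: Initiative;
  permissions: Permissions;
}

type EditRoute = "apply" | "propose" | "defer" | "reject";

// Decide what happens to an edit the agent wants to make on `layer`.
function routeEdit(agent: AgentParticipant, layer: string): EditRoute {
  if (!agent.permissions.writableLayers.includes(layer)) return "reject";
  if (agent.initiative === "wait") return "defer"; // hold until a human asks
  if (agent.initiative === "propose" || agent.permissions.requiresApproval.includes(layer)) {
    return "propose"; // surface as a suggestion the human can accept
  }
  return "apply";
}
```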

Why Maps Are the Right Starting Point

Maps are a natural first domain for this work, for a few reasons. They're inherently spatial — collaboration on a map is already about shared viewports, annotations, and layered data. They have a clear interaction vocabulary — pan, zoom, draw, filter, select — that both humans and agents can share. And the data they work with (sensor feeds, environmental data, satellite imagery, infrastructure records) increasingly comes from sources that agents are well-positioned to query and synthesize.
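The shared-vocabulary point can be sketched as one action type and one reducer that humans and agents both go through. This is a hypothetical TypeScript sketch: the action names mirror the verbs above but are not MapLibre's API, filter expressions are opaque strings, and viewports are tracked per participant on the assumption that each person steers their own view.

```typescript
// Hypothetical shared action vocabulary: humans and agents dispatch the same
// actions through the same reducer. Names mirror the verbs in the text and are
// not MapLibre's API; filter expressions are opaque strings here.

type LngLat = [number, number];

interface Actor {
  kind: "human" | "agent"; // recorded for UI, ignored by the reducer
  id: string;
}

type MapAction =
  | { type: "pan"; center: LngLat }
  | { type: "zoom"; zoom: number }
  | { type: "draw"; featureId: string; coords: LngLat[] }
  | { type: "filter"; layer: string; expr: string }
  | { type: "select"; featureId: string | null };

interface Viewport {
  center: LngLat;
  zoom: number;
}

interface MapState {
  viewports: Record<string, Viewport>; // one per participant
  drawings: Record<string, { coords: LngLat[]; author: string }>;
  filters: Record<string, string>; // layer id -> filter expression
  selections: Record<string, string | null>; // participant id -> selected feature
}

const DEFAULT_VIEW: Viewport = { center: [0, 0], zoom: 2 };

// One reducer for every participant; no action type is agent-only.
function reduce(state: MapState, actor: Actor, action: MapAction): MapState {
  const view = state.viewports[actor.id] ?? DEFAULT_VIEW;
  switch (action.type) {
    case "pan":
      return { ...state, viewports: { ...state.viewports, [actor.id]: { ...view, center: action.center } } };
    case "zoom":
      return { ...state, viewports: { ...state.viewports, [actor.id]: { ...view, zoom: action.zoom } } };
    case "draw":
      return {
        ...state,
        drawings: { ...state.drawings, [action.featureId]: { coords: action.coords, author: actor.id } },
      };
    case "filter":
      return { ...state, filters: { ...state.filters, [action.layer]: action.expr } };
    case "select":
      return { ...state, selections: { ...state.selections, [actor.id]: action.featureId } };
  }
}
```

Because authorship is recorded but never branches the logic, anything the agent draws can be moved, edited, or deleted by a human with the same actions, and vice versa.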

Imagine working with an environmental dataset on a map. You're exploring air quality readings across a region. An agent that has presence in the same map could: highlight anomalous readings as you pan across them, draw attention boundaries around areas where sensor coverage is sparse, annotate correlations between data layers you've loaded, or sketch a suggested monitoring route based on the gaps it's identified — all while you watch and redirect in real time.
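The first behavior in that list, highlighting anomalous readings as you pan, could be as simple as re-scoring whatever sits in the human's viewport each time it changes. The sketch below is hypothetical: the `Reading` fields, the PM2.5 metric, the z-score test, and the default threshold of 2 are all assumptions, and it ignores bounding boxes that cross the antimeridian.

```typescript
// Hypothetical agent behavior for the air-quality scenario: when the human's
// viewport changes, flag in-view readings that are unusually high or low
// relative to the other in-view readings (a simple z-score test).
// Field names and the threshold are assumptions for illustration.

interface Reading {
  id: string;
  lng: number;
  lat: number;
  pm25: number;
}

type BBox = [number, number, number, number]; // [west, south, east, north]

// Does not handle boxes that cross the antimeridian (west > east).
function inBBox(r: Reading, [w, s, e, n]: BBox): boolean {
  return r.lng >= w && r.lng <= e && r.lat >= s && r.lat <= n;
}

// Returns ids of in-view readings whose |z-score| exceeds `threshold`.
function anomaliesInView(readings: Reading[], view: BBox, threshold = 2): string[] {
  const visible = readings.filter((r) => inBBox(r, view));
  if (visible.length < 2) return [];
  const mean = visible.reduce((sum, r) => sum + r.pm25, 0) / visible.length;
  const variance = visible.reduce((sum, r) => sum + (r.pm25 - mean) ** 2, 0) / visible.length;
  const sd = Math.sqrt(variance);
  if (sd === 0) return [];
  return visible.filter((r) => Math.abs(r.pm25 - mean) / sd > threshold).map((r) => r.id);
}
```

In a live session the agent would read the bounding box from the human's awareness state, call `anomaliesInView`, and publish the returned ids as highlights on the shared map rather than returning a report.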

This isn't the agent performing analysis behind the scenes and returning a report. It's the agent thinking alongside you, spatially, in the same medium you're both looking at.

What Needs to Be Built

The building blocks exist. Yjs provides shared state and awareness. MCP provides data access. Map libraries like MapLibre provide the spatial rendering substrate. What's needed is the integration layer: an open specification and reference implementation that connects an AI agent to a collaborative spatial interface as a first-class participant.

This is infrastructure work. It's the kind of thing that should be open source, open protocol, and built in the interest of user agency, because the alternative is every platform building its own proprietary version, with different assumptions about what agents can see, do, and access.

The future of human-AI collaboration isn't agents that do things for you. It's agents that do things with you, in the same space, at the same time. We have the protocols for giving agents tools. We have the protocols for giving agents data. What we don't yet have is the protocol for giving agents presence.

That's the layer worth building.